TL;DR - LAVA + openQA = automated, continuous kernel testing

Everyone wants the same thing: stable software for the long term, including the latest features. To achieve this, you need to have confidence in your software and in the software you depend on. This confidence has to come from testing.

But testing modern software platforms can be extremely complex, and it’s easy to miss out on the latest fixes and features in Linux for fear of the time-consuming manual regression tests required.

Codethink always advocates staying as closely aligned to mainline as possible, limiting the overhead required to consume the latest upstream and benefit from the advances made there. But in practice this is often impossible, or is avoided, because of the burden of testing.

To solve this, we need continuous, automated and comprehensive testing. This blog post explains how we have developed a testing pipeline for the kernel, enabling automated boot tests both in QEMU and on hardware, as well as UI tests and the issuing of command line instructions to test responses.

Making use of Open Source Software

To build the testing pipeline we used openQA and LAVA. Both are focused on test automation and validation as part of a Continuous Integration development process. For an overview of each project, read on. If you already know the technology, feel free to skip to the next section: Setting up the Kernel Testing.

LAVA offers the ability to automate the deployment of operating systems onto both physical devices and virtual hardware, although its support for actual hardware is more developed than for emulated devices. It focuses on providing a range of deployment and boot methods rather than the tests themselves, so once an OS has been deployed and LAVA has logged in to the device under test, the type and range of tests are limited only by what the tester chooses to execute.

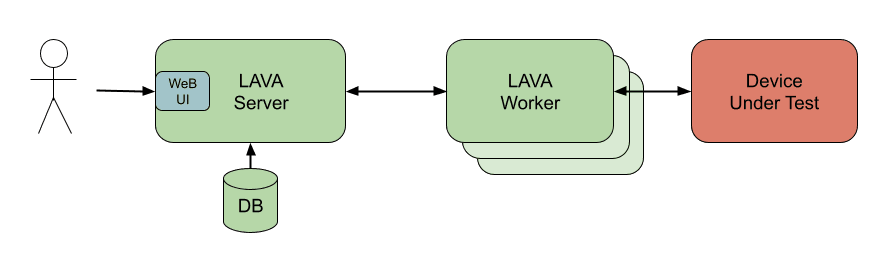

The architecture of a LAVA instance comprises a single server component, which can control one or more worker components. The server contains a web interface, which can be used to submit test jobs, monitor the status of the tests, and retrieve the results of completed tests. A test job request is initially queued. The LAVA server's scheduler periodically checks the server's database for queued jobs and for the current availability of appropriate test devices, allocating jobs to available workers whenever resources match requests. Inside the LAVA worker component is a dispatcher, which manages all the operations on the test device that arise from the parameters of the job request and from the device parameters provided by the LAVA server.
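The queue-and-match behaviour of the scheduler can be illustrated with a minimal Python sketch. This is not LAVA's code: the `Job` and `Device` classes, their fields, and the `schedule` function are hypothetical names invented for this illustration, and a real scheduler also deals with priorities, device health and much more.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Job:
    job_id: int
    device_type: str  # the kind of device the job asks for (illustrative field)


@dataclass
class Device:
    hostname: str
    device_type: str
    worker: str       # the worker whose dispatcher drives this device
    busy: bool = False


def schedule(queue, devices):
    """Run one scheduler pass over the queue.

    Each queued job is given to the first idle device of the requested type.
    Jobs with no matching idle device stay in the queue, in their original order.
    Returns a list of (job_id, device hostname, worker) assignments.
    """
    assignments = []
    still_waiting = deque()
    while queue:
        job = queue.popleft()
        device = next(
            (d for d in devices
             if not d.busy and d.device_type == job.device_type),
            None,
        )
        if device is None:
            still_waiting.append(job)
            continue
        device.busy = True
        assignments.append((job.job_id, device.hostname, device.worker))
    queue.extend(still_waiting)
    return assignments
```

Calling `schedule` repeatedly mimics the periodic check: a job that could not be placed in one pass is picked up in a later pass once a suitable device has been marked idle again.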

While LAVA focuses on the deployment and booting of the OS onto emulated or actual hardware, openQA is an automated test tool that allows for the possibility of testing the whole installation process of an OS. These tests can be used to check the output of the console and can also use OpenCV for fuzzy image matching of the screen, using a concept called needles. This can be used to determine whether the screen renders correctly and in the expected order. openQA also offers the ability to send keystrokes, which could be used, for example, to progress the installation process with an appropriate input, or to enter passwords when required by the system.
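To give a feel for what fuzzy matching against a needle means, here is a deliberately simplified Python sketch. It is not how openQA implements it: openQA uses OpenCV on real screenshots, whereas this stand-in compares small grayscale grids with a mean absolute difference. The dictionary layout (`area`, `pixels`, `match`) and the function names are assumptions made for this example only.

```python
def region(image, x, y, width, height):
    """Cut a rectangular region out of a grayscale image (a list of rows)."""
    return [row[x:x + width] for row in image[y:y + height]]


def similarity(a, b):
    """Return how alike two equal-sized grayscale regions are, from 0 to 100.

    100 means identical; 0 means every pixel differs by the full 0-255 range.
    """
    total_difference = 0
    pixel_count = 0
    for row_a, row_b in zip(a, b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total_difference += abs(pixel_a - pixel_b)
            pixel_count += 1
    if pixel_count == 0:
        return 0.0
    return 100.0 * (1 - total_difference / (255 * pixel_count))


def needle_matches(screen, needle):
    """Check whether the needle's reference pixels appear at its area on screen.

    The match is fuzzy: it succeeds when the similarity reaches the needle's
    own threshold, so small rendering differences are tolerated.
    """
    area = needle["area"]
    candidate = region(
        screen, area["xpos"], area["ypos"], area["width"], area["height"]
    )
    return similarity(candidate, needle["pixels"]) >= needle["match"]
```

A test step would typically wait until a needle matches the current screen, then send the next keystroke. The threshold is what makes the check robust against minor differences such as anti-aliasing.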

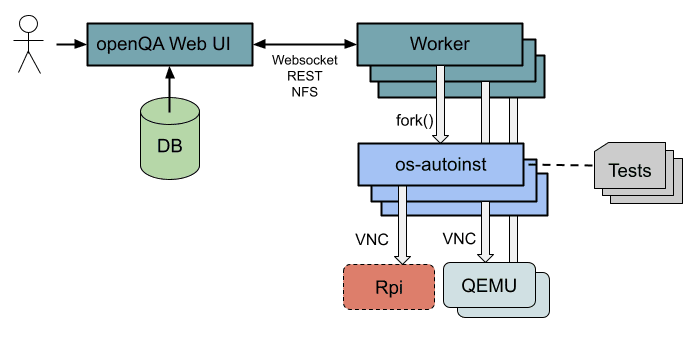

The architecture of openQA is similar to that of LAVA, in that it has a web interface that can be used to monitor the progress of submitted tests. It is also responsible for matching tests to available and appropriate machine resources, and assigning a job to a worker component, which interfaces with the openQA UI to collect data and input files that will be needed by the tests.

For each openQA worker there is an associated os-autoinst component which, in the case of an emulated machine, is responsible for the machine being created. It is also responsible for interacting with the machine under test, whether it is virtual or actual hardware, via a VNC connection, performing the actual tests and collecting the test results. These results include video of the machine's display as it is tested, along with screenshots of each test point and a detailed output log.

Setting up the Kernel Testing

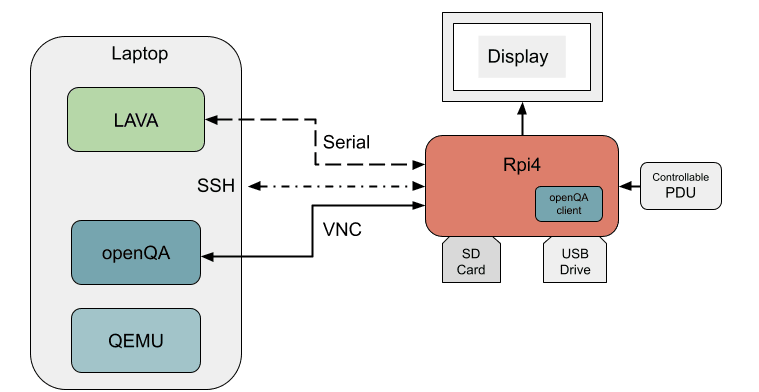

In order to investigate the possibilities of LAVA and openQA for testing a Linux kernel both in QEMU and on actual hardware, we created a small test setup using a Raspberry Pi 4 (Rpi4) and a laptop. The main aim of this test setup is to determine whether a change in the kernel has any impact on the deployment of a Linux OS and how it functions. For our testing purposes we used Ubuntu 20.04 for the OS, but the general concept of the setup should be flexible enough to test other Linux distributions.

The openQA testing under QEMU is the simpler of the two testing scenarios, as openQA can be directly instructed to use a particular QEMU image, and the installation of the kernel under test can form part of the openQA tests.

For the hardware testing scenario, the Rpi4 is connected to the laptop via its serial port, which allows a LAVA instance running on the laptop to communicate with it. The LAVA instance is created from two Docker containers, one for the LAVA server and one for the LAVA worker. The Rpi4 has two connected storage devices, an SD card and a USB drive, which hold a base image and a test image respectively. This configuration allows us to recover from any issue that arises during the testing, as the Rpi4 defaults to booting from the base image, which is never used during testing.

A LAVA job, as part of a deploy action, first checks that all the files needed to create a ramdisk for the Rpi4 exist and then downloads them to the LAVA dispatcher assigned to that job. Once the dispatcher has created the ramdisk, it begins a boot action, or more specifically, a U-Boot action. This entails the creation of a bootloader overlay specific to the board under test and then triggering a reboot of the Rpi4. Using a bootloader interrupt method from LAVA, the dispatcher uses the previously created overlay to configure U-Boot and download the ramdisk to the Rpi4's memory. Once complete, the final command of the bootloader overlay makes it boot into the test image on the USB drive, using the kernel in the ramdisk.
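The deploy-then-boot structure of such a job can be sketched as follows. LAVA job definitions are normally written in YAML; the Python dictionary below mirrors that structure only so it can be built and inspected programmatically. This is not the job definition used in our pipeline: the key names follow LAVA's job schema as best recalled and should be checked against the LAVA documentation, and the job name, the `tftp` deploy target, the device type string, the timeouts, the login prompt and the URLs are all assumptions for illustration.

```python
import json


def build_job(kernel_url, ramdisk_url, dtb_url, device_type="bcm2711-rpi-4-b"):
    """Build an illustrative LAVA-style job: one deploy action, then one boot action."""
    return {
        "job_name": "rpi4-kernel-boot-sketch",  # hypothetical name
        "device_type": device_type,             # assumed device type string
        "visibility": "public",
        "priority": "medium",
        "timeouts": {
            "job": {"minutes": 30},             # assumed values
            "action": {"minutes": 10},
        },
        "actions": [
            {
                # Deploy: fetch the files the dispatcher needs for the ramdisk boot.
                "deploy": {
                    "to": "tftp",               # assumption: transfer method
                    "kernel": {"url": kernel_url, "type": "image"},
                    "ramdisk": {"url": ramdisk_url, "compression": "gz"},
                    "dtb": {"url": dtb_url},
                }
            },
            {
                # Boot: interrupt U-Boot and run the ramdisk boot commands.
                "boot": {
                    "method": "u-boot",
                    "commands": "ramdisk",
                    "prompts": ["login:"],      # assumed prompt to wait for
                }
            },
        ],
    }


def action_order(job):
    """Return the action names in the order LAVA would run them."""
    return [next(iter(action)) for action in job["actions"]]


def to_json(job):
    """Serialise the job for inspection (JSON is also valid YAML)."""
    return json.dumps(job, indent=2)
```

Keeping deploy and boot as separate, ordered actions reflects the sequence described above: the files must be in place on the dispatcher before the bootloader is interrupted.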

Once the Rpi4 has booted, the LAVA dispatcher sends the commands to log in to it, and then runs a configuration script (which is already part of the test image, along with a configuration to enable screen sharing). Once the two devices are connected to the same network, the LAVA dispatcher triggers the running of a containerised image of an openQA client. This client requests that the openQA instance, running on the laptop, assign a worker/os-autoinst pair to perform a specific set of tests on the Rpi4. The openQA instance on the laptop is constructed from three Docker containers: one each for the UI, the worker and the supporting database.

In order to run the openQA tests on actual hardware, a new backend for the os-autoinst component of openQA needed to be created, i.e. one that only provides a VNC connection to the board under test, as there is now no need to create a virtual machine. This was a relatively simple task, as openQA's architecture allows for the creation of new backends, providing a generic code template.

From this point, the openQA testing part of the investigation is almost identical for QEMU and for actual hardware. The only difference is that, in the hardware case, the LAVA dispatcher has control of the serial console. So in order to run script-based, non-graphical tests on the hardware, a new openQA test 'distribution' (or distri) needs to be defined for the test group. This allows you to define the additional consoles you intend to use, which in our case was a 'serial over SSH' console.

Linux Kernel Testing Pipeline

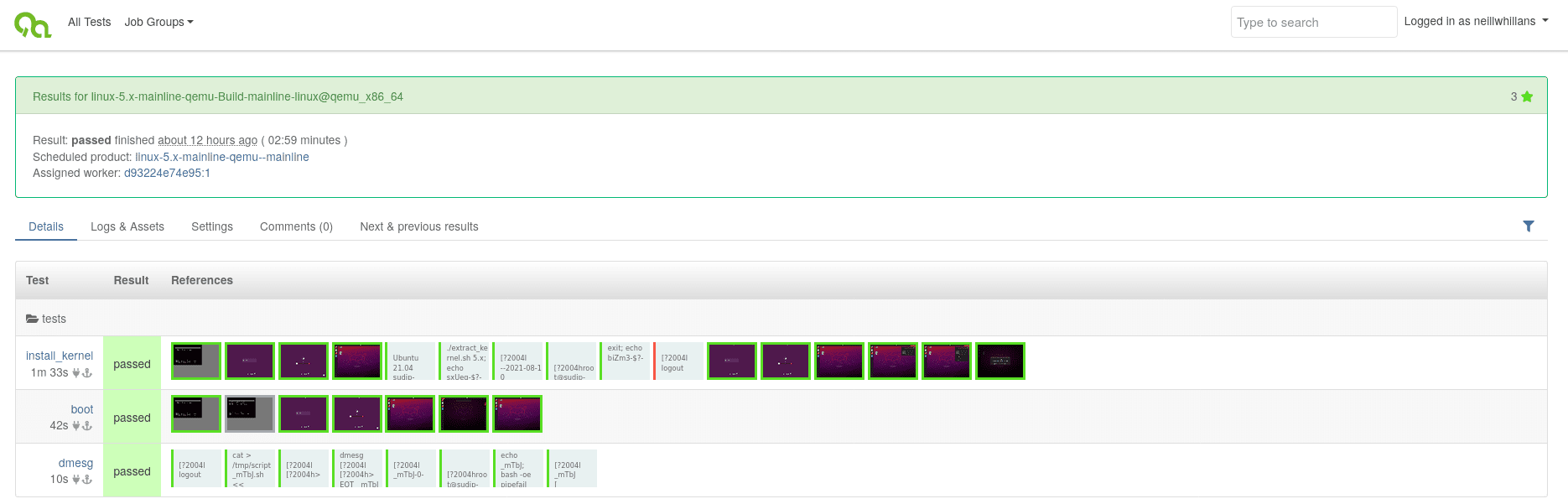

The combination of LAVA and openQA has proven successful in our implementation of a Linux kernel testing pipeline, with the results now available to view from our public facing openQA UI.

QEMU test of mainline kernel

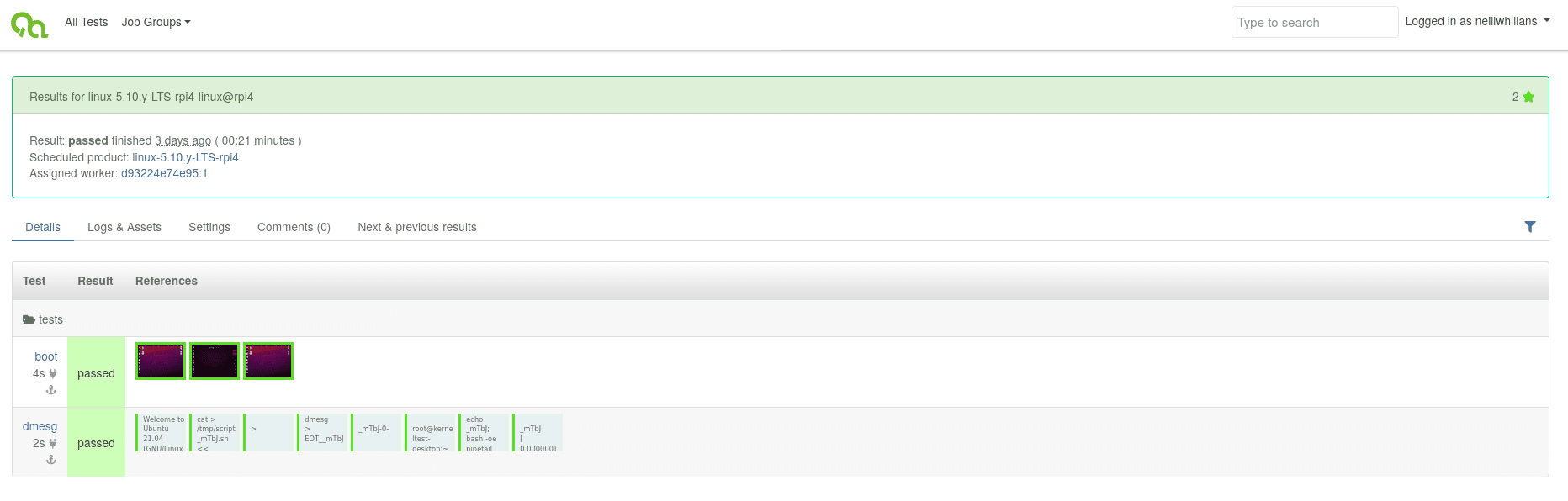

Hardware test of LTS kernel

The pipeline means we’re now performing automated daily tests of the Linux mainline kernel in QEMU, and of LTS kernels both in QEMU and on hardware. The results form part of the regular test reports provided to the Linux kernel's stable branch maintainers.

Whilst this is obviously not yet a comprehensive test suite in its own right, it is a start, and helps us to catch any major regressions as early as possible, stopping them ever being added to the kernel. We welcome any additions to the pipeline from other interested parties.

Next up, we plan to output the results of the test pipeline to the KernelCI project, and add more architectures to the setup, starting with RISC-V.

Related blog posts:

- OpenQA and LAVA for GNOME: Higher quality of FOSS: How we are helping GNOME to improve their test pipeline

- Aligning to the mainline Linux kernel: Why aligning with open source mainline is the way to go
