As part of our work to create a trustable and safe reference Linux distribution we need to make sure that the artifacts of our build process are reproducible - that if we repeat a build, then we get the exact same results as the first time, regardless of the host environment. If our builds are not reproducible, then the safety of a given build cannot be verified, as we can't even confirm that the source code corresponds to the binaries.

Our builds are performed using BuildStream, a meta-build tool targeting a similar problem space to Bitbake or Buildroot - that is to create entire system images from a variety of projects. BuildStream builds are sandboxed using Bubblewrap, which does a relatively good job of isolating the build environment from the host environment, making the environment (mostly) consistent between machines.

We verify the reproducibility of our builds in a GitLab pipeline, which builds our distribution twice and compares the outputs bit-for-bit. After changing runners in GitLab, we noticed that this test no longer passed. This implied that our builds were not reproducible between machines after all! The changes between the machines were innocuous enough - a bump to the OS version, a move from a physical machine to a virtual machine, and a switch from an Intel processor to one from AMD (notably, these use the same Instruction Set Architecture (ISA)). BuildStream's sandboxing capabilities means that none of these changes should be affecting the reproducibility of our project. Things began to look interesting.

Finding the Source

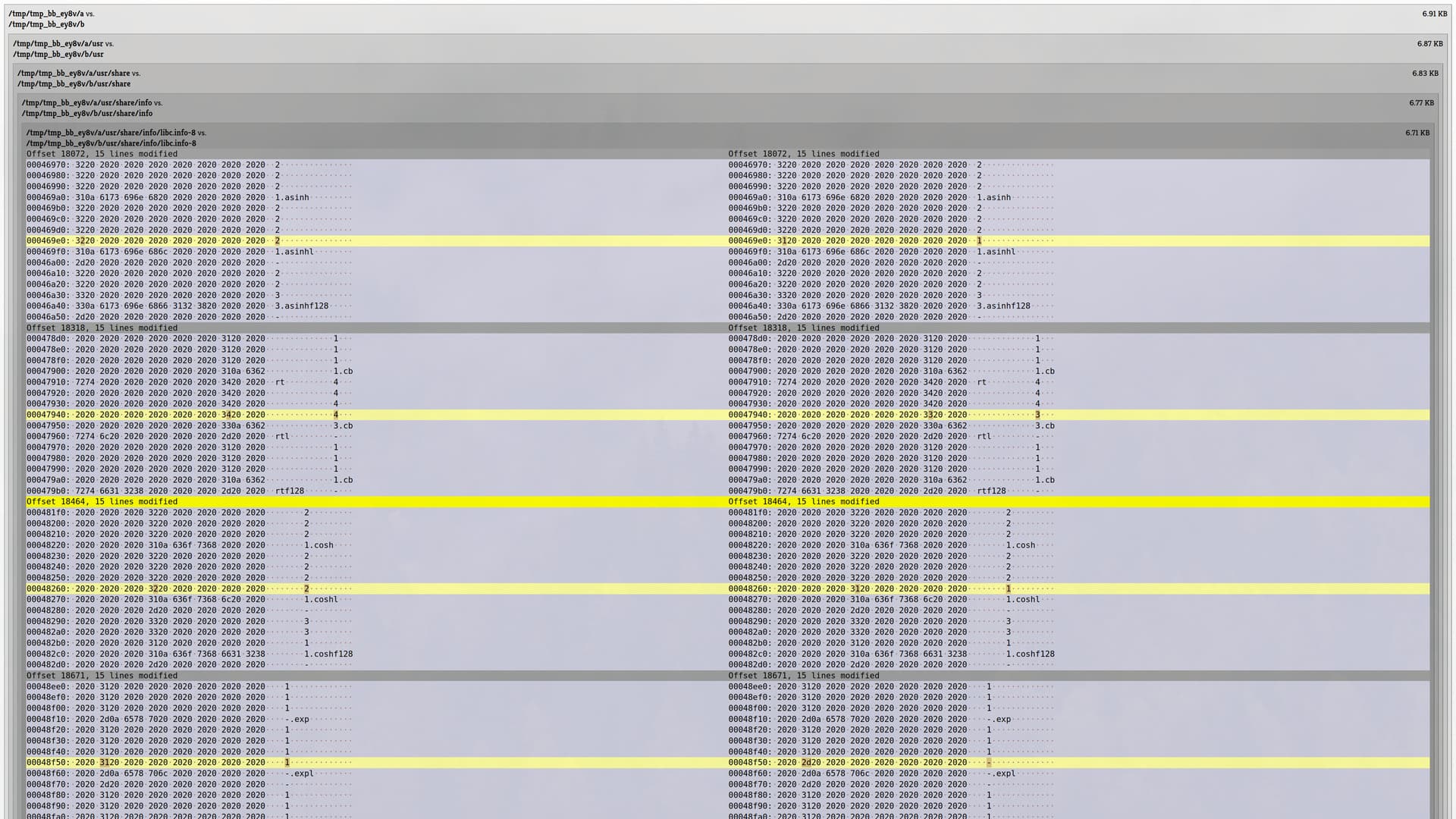

Our reproducibility tests were flagging

glibc as the component that had changed

between builds, with the differences being in one of the pages of the manual:

libc.info-8. From the diffoscope output below, we

can see that the changes appear to be in some sort of table involving

mathematical functions. If we crack open a local copy of the file, then we find

the likely culprit is a table entitled Known Maximum Errors in Math Functions,

which can also be viewed

online.

This table holds per-architecture information about the maximum possible error in functions from libm. By the nature of floating point numbers (and modelling infinitely many things in finitely many ways in general) there are unavoidably errors in the computations done when computers do mathematics. Generally computers use approximations or numerical methods to calculate the value of a mathematical function, which means there is an error. Different floating point units provide different error values. This table records these errors in a unit called "units of the last place", which is frequently shortened to "ulps" in the glibc codebase.

If we inspect the diff more closely, a pattern becomes clear - the changes seem to be to the same column in the table. The table is split into several 5-column chunks, and our offender is the 4th in one such chunk. We can jump to the offset of the changed value in a local copy of the artifact to find the architecture causing issues. RISC-V.

Hunting the Bug

The next logical step is figuring out how the table is generated - it seems odd

that we're getting different results for RISC-V on two x86-64 systems! After a

bit of digging through TeX files and Makefiles, we are led to a python

script

that generates the table. Instead of calculating the errors on every build, the

results are stored in the glibc repository statically and updated periodically.

The values for a given architecture can be found in an architecture-specific

directory in a file called libm-test-ulps. Beside each of these is a

libm-test-ulps-name file containing the name of the architecture as presented

in the table heading.

When generating the table, these libm-test-ulps files are parsed by the python

script and placed into a python dictionary, which is keyed by the contents of

libm-test-ulps-name. This means that if there's a collision in the names of

two architectures, then one could overwrite the other! In the RISC-V architecture

directory we can find that both sysdeps/riscv/rv32/rvd/libm-test-ulps-name and

sysdeps/riscv/rv64/rvd/libm-test-ulps-name contain RISC-V. This looks to be

the source of the inconsistency, but why did it only appear when we changed our

GitLab runner?

The answer to this is in how the libm-test-ulps files are found - the script

calls Python's os.walk() method to traverse the directory tree, but the order

of the results can't be relied upon. os.walk() works by calling readdir(3)

repeatedly, but the order of directory entries returned is dependent upon the

filesystem implementation. You can easily view the order given by readdir by

using ls -f!

[coldtom@osgiliath scratch]$ ls -a

. .. a b c d e f g h i j k l m n o p q r s t u v w x y z

[coldtom@osgiliath scratch]$ ls -f

k q x n l a e s b t m . j r y c i h d o p f u z v g w ..

But why now?

At this point, we were satisfied that the issue was due to this, and that the correct fix was to patch glibc to avoid the collision, but we could still go a level deeper. What differences in the filesystems of the two machines led to the discrepancy?

Both machines were using an ext4 filesystem, so the source of the difference could have been either some change to the kernel due to the OS bump, or some fundamental facet of the ext4 implementation meaning that ordering is not consistent between two different ext4 filesystems. We can check the kernel changes for any likely candidates by looking through the git commit log for the ext4 module, but between the two Debian versions there were no such commits.

It turns out that the ordering of readdir for ext4 filesystems depends on

several factors, but in the typical case (the one we had), ext4 directory

listings are represented by a tree keyed on hashes of the entry filenames. The

big caveat is that the hash function is seeded by a filesystem-wide random seed

to make these hashes unique between filesystems! This results in the behaviour

that we observed - consistent ordering on a single filesystem, but a different

filesystem showing a different order.

Conclusion

The final resolution of the bug was a trivial

patch to

glibc to separate the two variants - simply modifying the libm-test-ulps-name

values to include a reference to the word size of the architecture. That is

RISC-V 32-bit and RISC-V 64-bit.

This whole experience was a good example of how one can take a bug as a learning

opportunity! In hunting down this relatively benign (in the end) bug, I gained

knowledge on floating point mathematical functions, a wariness for functions

that may call readdir and a greater appreciation for the ext4 filesystem. A

more practical takeaway is that reproducibility is hard to achieve even when

using appropriate tooling, and that it's easy to overlook factors that affect

project reproducibility when testing.

Other Content

- Podcast: Embedded Insiders with John Ellis

- To boldly big-endian where no one has big-endianded before

- How Continuous Testing Helps OEMs Navigate UNECE R155/156

- Codethink’s Insights and Highlights from FOSDEM 2025

- CES 2025 Roundup: Codethink's Highlights from Las Vegas

- FOSDEM 2025: What to Expect from Codethink

- Codethink Joins Eclipse Foundation/Eclipse SDV Working Group

- Codethink/Arm White Paper: Arm STLs at Runtime on Linux

- Speed Up Embedded Software Testing with QEMU

- Open Source Summit Europe (OSSEU) 2024

- Watch: Real-time Scheduling Fault Simulation

- Improving systemd’s integration testing infrastructure (part 2)

- Meet the Team: Laurence Urhegyi

- A new way to develop on Linux - Part II

- Shaping the future of GNOME: GUADEC 2024

- Developing a cryptographically secure bootloader for RISC-V in Rust

- Meet the Team: Philip Martin

- Improving systemd’s integration testing infrastructure (part 1)

- A new way to develop on Linux

- RISC-V Summit Europe 2024

- Safety Frontier: A Retrospective on ELISA

- Codethink sponsors Outreachy

- The Linux kernel is a CNA - so what?

- GNOME OS + systemd-sysupdate

- Codethink has achieved ISO 9001:2015 accreditation

- Outreachy internship: Improving end-to-end testing for GNOME

- Lessons learnt from building a distributed system in Rust

- FOSDEM 2024

- QAnvas and QAD: Streamlining UI Testing for Embedded Systems

- Outreachy: Supporting the open source community through mentorship programmes

- Using Git LFS and fast-import together

- Testing in a Box: Streamlining Embedded Systems Testing

- SDV Europe: What Codethink has planned

- How do Hardware Security Modules impact the automotive sector? The final blog in a three part discussion

- How do Hardware Security Modules impact the automotive sector? Part two of a three part discussion

- How do Hardware Security Modules impact the automotive sector? Part one of a three part discussion

- Automated Kernel Testing on RISC-V Hardware

- Automated end-to-end testing for Android Automotive on Hardware

- GUADEC 2023

- Embedded Open Source Summit 2023

- RISC-V: Exploring a Bug in Stack Unwinding

- Adding RISC-V Vector Cryptography Extension support to QEMU

- Introducing Our New Open-Source Tool: Quality Assurance Daemon

- Achieving Long-Term Maintainability with Open Source

- FOSDEM 2023

- PyPI Security: How to Safely Install Python Packages

- BuildStream 2.0 is here, just in time for the holidays!

- A Valuable & Comprehensive Firmware Code Review by Codethink

- GNOME OS & Atomic Upgrades on the PinePhone

- Flathub-Codethink Collaboration

- Codethink proudly sponsors GUADEC 2022

- Full archive