At the European STAMP Conference and Workshop, Paul Albertella presented how Codethink has been using STPA for risk analysis of software-intensive systems, and integrating its outputs into the engineering process as part of our new approach to safety. In this article, he explores the background and topics of his talk in more detail.

Background

Modern safety-related applications such as automated driving are being created using systems that are increasingly complex, with multi-core microprocessors, co-processors and dedicated sensor controllers. Such systems also incorporate a multitude of software components, which may involve hundreds of thousands of lines of code for firmware alone, and many millions for multi-threaded applications running on a full-scale OS. Furthermore, the management, construction, integration and verification of this system-level software involves yet more software: a dizzying array of tools and dependency packages.

Functional safety standards have historically made assumptions about the software used to create safety-related systems that do not readily apply to such software-intensive systems and their ecosystems. Precisely specified behaviour for all execution paths cannot be taken for granted, since complex systems may exhibit non-deterministic behaviour, or even exploit it as part of their design. Much of the software involved in such systems is pre-existing and generic, may not have been developed with functional safety in mind, and may have complex or loosely controlled supply chains for its dependencies.

This is especially true for free and open source software (FOSS), most of which is explicitly provided without warranty for a particular purpose, and lacks the formally-specified requirements and design that are the foundation of a traditional software engineering process. FOSS projects may have coherent and sophisticated development processes, but they tend to be operated by communities rather than corporations, with a different philosophy of engineering to that described in the reference processes expected by safety standards.

Without the confidence traditionally provided by conformance to such a process, and in the absence of the warranty and other assurances that may be provided for a commercial software product, how can we be confident about using such software to create a safety-related system?

Bridging the specification gap

FOSS components rarely come with formal specification material for a general context. One way to address this question is to remedy that gap by documenting a specific context instead: their role in achieving a given system's safety goals.

This is not a novel strategy. ISO 26262 describes methods for qualifying pre-existing software components as part of a larger safety-related system by applying similar principles (part 8, section 12). However, these methods are primarily intended to facilitate the re-use of software elements from previously qualified or in-use systems, or of commercial off-the-shelf (COTS) software libraries; hence the qualification criteria remain difficult to apply to FOSS.

IEC 61508 describes a similar qualification approach, including a route (Route 3) for "assessment of non-compliant development" (part 3, 7.4.2.13). Amongst other criteria, this route requires that the pre-existing software be "documented to the same degree of precision" as specified for software developed using a compliant process, and that it has been subjected to "verification and validation using a systematic approach with documented testing and review of all parts of the element’s design and code".

Following this route, we could theoretically document our own requirements for the FOSS components, document our inferred design specification based on our analysis of the code, and then develop a complete set of verification measures to demonstrate that they satisfy our requirements. However, this remains problematic for three reasons.

Firstly, the effort involved in performing this kind of specification and verification for all of the FOSS components in a given system (especially a Linux-based OS) is potentially huge and difficult to quantify, which makes product developers and system integrators understandably reluctant to undertake it.

Secondly, completing this process may be of limited value for those portions of the software that are unused or irrelevant for our particular system. Unused portions might include optional or hardware-specific features that are not even compiled into our version of the component. Irrelevant portions might include those relating to use cases that do not apply for our system, or which cannot have any impact on its safety goals.

Finally, our completed specification and verification measures might only be applicable for a single 'snapshot' of the FOSS that we have used. Unless we could somehow persuade the relevant FOSS developer community to adopt and maintain our specification, and follow formal software engineering processes to give us confidence in its ongoing relevance and accuracy, we would have to maintain it ourselves.

It is this need for potentially huge, possibly unquantifiable and likely repeated effort that has historically made the use of FOSS in safety-related systems appear uneconomic. To change this, we would need a way to constrain the scope of analysis, specification and verification without compromising safety, and a safe and economic way to maintain the assets that we produce for future versions of our FOSS components. In an ideal world, these assets would also be reusable and, given the fundamental philosophy of FOSS, available for others to use, refine and extend.

An STPA-based approach

The approach to these problems that Codethink has been developing as part of our work on Linux is called RAFIA (Risk Assessment, Fault Injection and Automation). The risk assessment part of this is based on the System-Theoretic Process Analysis (STPA) methodology developed at MIT. By applying its techniques as part of the safety workflow for a system (an Item or SEooC in ISO 26262 terms) that incorporates FOSS components, we believe that it is possible to:

- Document the system's scope and safety goals clearly for all stakeholders

- Specify the functions that FOSS components are to provide within the system

- Characterise the risks that these functions entail with respect to the system's safety goals

- Specify how these risks are to be mitigated by:

  - design features of the FOSS components

  - verification measures for the FOSS components

  - safety measures assigned to other elements

- Derive detailed safety requirements for FOSS components, which can be used to verify their assigned functions and safety measures

- Derive safety requirements for other parts of the system, which can be used to verify the risk mitigations that they must provide

- Derive fault injection strategies for FOSS components and the wider system, which can be used to validate these verification and mitigation measures

- Derive stress testing strategies, to validate the sufficiency of the identified safety measures
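To make the fault injection item more concrete, here is a minimal Python sketch of how candidate fault-injection scenarios might be derived from UCAs. It assumes the four UCA categories described in the STPA Handbook (not provided, provided, wrong timing or order, stopped too soon or applied too long); the `UCA` record, the `FAULT_TEMPLATES` mapping and the example IDs are hypothetical illustrations, not part of Codethink's RAFIA tooling.

```python
from dataclasses import dataclass
from enum import Enum


class UCAType(Enum):
    """The four ways a control action can be unsafe, per the STPA Handbook."""
    NOT_PROVIDED = "not provided"
    PROVIDED = "provided"
    WRONG_TIMING = "too early, too late or out of order"
    WRONG_DURATION = "stopped too soon or applied too long"


@dataclass
class UCA:
    """Hypothetical minimal record for an unsafe control action."""
    uca_id: str
    controller: str
    control_action: str
    uca_type: UCAType


# Hypothetical mapping from UCA type to the kind of fault we would inject.
FAULT_TEMPLATES = {
    UCAType.NOT_PROVIDED: "suppress '{action}' from {controller}",
    UCAType.PROVIDED: "issue a spurious '{action}' from {controller}",
    UCAType.WRONG_TIMING: "delay or reorder '{action}' from {controller}",
    UCAType.WRONG_DURATION: "truncate or prolong '{action}' from {controller}",
}


def derive_fault_scenarios(ucas):
    """Return one candidate fault-injection scenario per UCA, keyed by UCA ID."""
    return {
        uca.uca_id: FAULT_TEMPLATES[uca.uca_type].format(
            action=uca.control_action, controller=uca.controller
        )
        for uca in ucas
    }


if __name__ == "__main__":
    # Hypothetical example UCAs for a scheduler controller.
    ucas = [
        UCA("UCA-1", "scheduler", "dispatch task", UCAType.NOT_PROVIDED),
        UCA("UCA-2", "scheduler", "dispatch task", UCAType.WRONG_TIMING),
    ]
    for uca_id, scenario in derive_fault_scenarios(ucas).items():
        print(f"{uca_id}: {scenario}")
```

Each generated scenario would still need an engineer to turn it into a concrete injection mechanism for the component concerned; the mapping only ensures that every UCA is considered.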

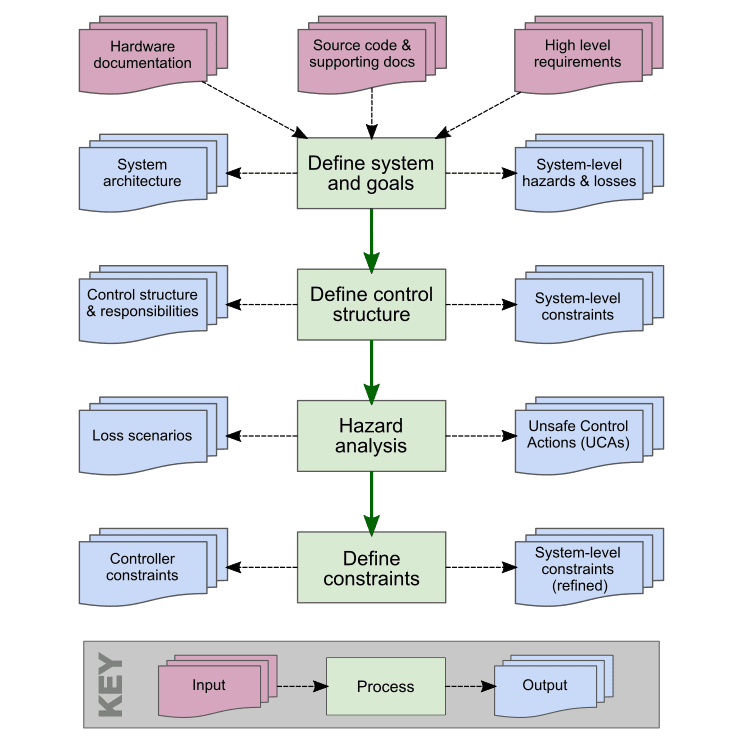

This approach builds directly on the analytical stages and documentation outputs of the STPA methodology, and uses these to drive the required software and system engineering processes as follows:

- The safety goals for the system are specified as system-level constraints, which are defined by reference to the losses and system-level hazards that they are intended to prevent or mitigate

- The functions of components are modelled in control structure diagrams, and their specific roles in implementing the system-level constraints are specified as controller responsibilities

- Risks are identified, analysed and documented using unsafe control actions and loss scenarios

- Safety requirements and mitigations that FOSS components are responsible for implementing are specified as controller constraints

- Safety requirements and mitigations for other components of the system are also specified using controller constraints, or by refining the defined system-level constraints and responsibilities
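The chain of references described above can be modelled as a small set of linked records. The Python sketch below is one possible shape, assuming every element carries an ID and lists the IDs of the elements it refers to; the `Element` class, its fields and the example IDs are hypothetical and do not reflect Codethink's YAML schema. `trace_to_losses` walks from a controller constraint back to the losses it ultimately protects against.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """A generic STPA output (loss, hazard, constraint, UCA...) with outgoing references."""
    element_id: str
    kind: str  # e.g. "loss", "hazard", "system-constraint", "uca", "controller-constraint"
    text: str
    refs: list = field(default_factory=list)  # IDs of the elements this one refers to


def trace_to_losses(start_id, elements):
    """Follow references from start_id and return the sorted IDs of all losses reached."""
    by_id = {e.element_id: e for e in elements}
    losses, seen, stack = set(), set(), [start_id]
    while stack:
        current = by_id[stack.pop()]
        if current.element_id in seen:
            continue  # skip elements already visited via another path
        seen.add(current.element_id)
        if current.kind == "loss":
            losses.add(current.element_id)
        stack.extend(current.refs)
    return sorted(losses)


if __name__ == "__main__":
    # Hypothetical example model, loosely in the style of an automotive analysis.
    model = [
        Element("L-1", "loss", "Loss of life or injury"),
        Element("H-1", "hazard", "Vehicle does not maintain safe distance", ["L-1"]),
        Element("SC-1", "system-constraint", "Vehicle must maintain safe distance", ["H-1"]),
        Element("UCA-1", "uca", "Brake command not provided when obstacle detected", ["H-1"]),
        Element("CC-1", "controller-constraint",
                "Controller must provide brake command when obstacle detected", ["UCA-1"]),
    ]
    print(trace_to_losses("CC-1", model))  # ['L-1']
```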

Integrating STPA into an engineering process

An important point to note is that these analytical and requirement definition activities will almost invariably need to be undertaken iteratively.

New risks may be identified at any stage, and may be introduced by the safety measures adopted to mitigate other risks. Furthermore, new or modified risks, or refinements to the system's control structure(s), may be identified during the verification and validation phases that follow. It is therefore essential both to plan the phases of development accordingly and to manage the STPA outputs as part of the overall engineering process.

Codethink's approach to this is to store this documentation under revision control alongside the source code and other documentation, using a Git repository management tool such as GitLab or GitHub. This allows us to capture the history and evolution of the documents, as well as comments made by reviewers and evidence that they have been addressed, and to link them to related materials, such as tests, design documents or usage requirements in a safety manual.

These documents will form part of the specification for the system and the software components, and hence they need to be subject to change control. Managing documentation in the same way as source code enables a coordinated approach. Documents can be made visible to all stakeholders to provide a common understanding, and it should always be clear which version of the specification is current and approved. We can also use the change control process to trigger impact analysis when part of a specification changes.
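One simple way to scope that impact analysis is to treat the specification as a graph of references and, when an element changes, flag everything that depends on it directly or transitively. The Python sketch below assumes each element is a dict with an `id` and a list of referenced IDs under `refs`; those field names and the example IDs are hypothetical.

```python
from collections import defaultdict, deque


def impacted_elements(elements, changed_ids):
    """Return sorted IDs of elements that reference any changed ID, directly or transitively."""
    # Build a reverse index: referenced ID -> IDs of the elements that reference it.
    dependents = defaultdict(set)
    for element in elements:
        for ref in element.get("refs", []):
            dependents[ref].add(element["id"])

    # Breadth-first walk over the reverse index, starting from the changed elements.
    impacted = set()
    queue = deque(changed_ids)
    while queue:
        current = queue.popleft()
        for dependent in dependents[current]:
            if dependent not in impacted:
                impacted.add(dependent)
                queue.append(dependent)
    # Exclude the changed elements themselves (relevant if references form a cycle).
    return sorted(impacted - set(changed_ids))


if __name__ == "__main__":
    # Hypothetical specification fragment: hazards, UCAs, constraints and a test.
    spec = [
        {"id": "H-1", "refs": ["L-1"]},
        {"id": "UCA-1", "refs": ["H-1"]},
        {"id": "CC-1", "refs": ["UCA-1"]},
        {"id": "TEST-7", "refs": ["CC-1"]},
        {"id": "UCA-2", "refs": ["H-2"]},
    ]
    # A change to hazard H-1 should prompt review of everything downstream of it.
    print(impacted_elements(spec, ["H-1"]))  # ['CC-1', 'TEST-7', 'UCA-1']
```

Note that the test `TEST-7` is flagged as well: if tests carry references to the constraints they verify, a change to the analysis also identifies the verification evidence that may need revisiting.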

However, a specification of this type, which drives the engineering process, is less useful if stored in a form that is only optimised for human consumption, such as a word processor document or a spreadsheet. To integrate it successfully into the engineering process, it is best to record these results using a structured data representation. This allows the information to be consumed and processed by other tools, as well as allowing us to generate formatted versions for human consumption. We can also define a schema and syntax rules for our data representation, to promote consistency and avoid ambiguity.

As part of our ongoing work with STPA, Codethink have defined and documented a set of data structures in YAML (see sample above) to represent all of the STPA outputs, and a set of Python scripts to validate this 'schema' and produce simple 'human-readable' reports in Markdown. These, together with samples based on the examples in the STPA Manual, will be shared in an open source project in due course.
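The validation and reporting steps can be sketched in a few lines of Python. This is not Codethink's tooling: it assumes the YAML has already been loaded into plain dicts (for example with PyYAML's `yaml.safe_load`), and the record kinds, field names and ID formats in the hypothetical `SCHEMA` are illustrative only.

```python
import re

# Hypothetical rules: required fields and ID format for each kind of record.
SCHEMA = {
    "hazard": {"required": {"id", "text", "losses"}, "id_pattern": r"H-\d+"},
    "uca": {"required": {"id", "text", "hazards"}, "id_pattern": r"UCA-\d+"},
}


def validate_record(kind, record):
    """Return a list of problems with one record (empty if it is valid)."""
    rules = SCHEMA.get(kind)
    if rules is None:
        return [f"unknown record kind '{kind}'"]
    problems = []
    missing = rules["required"] - set(record)
    if missing:
        problems.append(f"{kind} is missing fields: {', '.join(sorted(missing))}")
    record_id = str(record.get("id", ""))
    if not re.fullmatch(rules["id_pattern"], record_id):
        problems.append(f"{kind} ID '{record_id}' does not match {rules['id_pattern']}")
    return problems


def escape_cell(value):
    """Escape pipe characters so they do not break the Markdown table."""
    return str(value).replace("|", "\\|")


def ucas_to_markdown(ucas):
    """Render UCA records as a Markdown table, one row per UCA."""
    lines = [
        "| ID | Unsafe control action | Hazards |",
        "|----|-----------------------|---------|",
    ]
    for uca in ucas:
        hazards = ", ".join(uca.get("hazards", [])) or "(none)"
        lines.append(
            f"| {escape_cell(uca['id'])} | {escape_cell(uca['text'])} | {escape_cell(hazards)} |"
        )
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical records; the second is deliberately invalid.
    ucas = [
        {"id": "UCA-1", "text": "Brake command not provided when obstacle detected", "hazards": ["H-1"]},
        {"id": "U2", "text": "Brake command provided too late"},
    ]
    for uca in ucas:
        for problem in validate_record("uca", uca):
            print("invalid:", problem)
    print(ucas_to_markdown(ucas), end="")
```

Keeping the rules in a data structure rather than in code makes them easy to review alongside the documents they govern.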

By managing these YAML documents in our revision control system, and using them as part of the continuous integration (CI) workflow for the related source code and documentation projects, we can:

- Make use of the same review and approval systems, to ensure that we have a consistent and coordinated process

- Automatically generate human-readable versions of documents in a variety of formats for review

- Verify changes to STPA outputs to ensure that they follow the defined syntax and schema rules (e.g. every UCA must reference a hazard)

- Reject changes if these checks fail, to ensure that the 'main' branch of the repository only contains verified and approved revisions of the documents

- Identify gaps in the analysis (e.g. does every UCA have an associated controller constraint?)

- Trigger impact analysis for new or modified elements (e.g. control actions) where appropriate

- Provide traceability reports to identify where our safety requirements (constraints) have corresponding tests to verify them, or a dependency on external safety measures to mitigate them

- Link these checks and reports to milestone criteria, e.g. for a release candidate or a review by a safety assessor
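Several of these checks reduce to cross-referencing IDs across documents. The Python sketch below, which assumes hypothetical dict records with `id`, `hazards`, `ucas` and `external_measures` fields plus a hypothetical map from constraint IDs to test IDs, reports UCAs that reference no known hazard, UCAs with no controller constraint, and controller constraints with neither a test nor an external safety measure. It illustrates the idea rather than describing Codethink's CI jobs.

```python
def check_analysis(hazards, ucas, constraints, tests_by_constraint):
    """Return a list of problems found in the analysis (empty if all checks pass)."""
    problems = []
    hazard_ids = {h["id"] for h in hazards}

    # Rule: every UCA must reference at least one existing hazard.
    for uca in ucas:
        refs = uca.get("hazards", [])
        if not refs:
            problems.append(f"{uca['id']}: references no hazard")
        for ref in refs:
            if ref not in hazard_ids:
                problems.append(f"{uca['id']}: unknown hazard {ref}")

    # Gap: every UCA should be addressed by at least one controller constraint.
    covered = {u for c in constraints for u in c.get("ucas", [])}
    for uca in ucas:
        if uca["id"] not in covered:
            problems.append(f"{uca['id']}: no controller constraint")

    # Traceability: each constraint needs a test or an external safety measure.
    for c in constraints:
        if not tests_by_constraint.get(c["id"]) and not c.get("external_measures"):
            problems.append(f"{c['id']}: no verifying test or external measure")
    return problems


def main():
    """Run the checks on hypothetical data and return a CI-style exit status."""
    hazards = [{"id": "H-1"}]
    ucas = [{"id": "UCA-1", "hazards": ["H-1"]}, {"id": "UCA-2", "hazards": ["H-9"]}]
    constraints = [{"id": "CC-1", "ucas": ["UCA-1"]}]
    tests = {}  # hypothetical map: constraint ID -> list of test IDs
    problems = check_analysis(hazards, ucas, constraints, tests)
    for problem in problems:
        print(problem)
    return 1 if problems else 0


if __name__ == "__main__":
    # In a CI job, pass this status to sys.exit() so that failures block the merge.
    print(f"exit status: {main()}")
```

Returning a non-zero status on any problem is what lets the CI system reject the change, so the 'main' branch only ever holds revisions that pass every check.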

This automation of the processes relating to the safety analysis, alongside the construction and verification of the software, provides us with the final A in RAFIA.

Applying the RAFIA methodology: Deterministic Construction Service (DCS)

STPA enables us to specify the safety requirements for FOSS components in the context of our system, and to determine how these are to be verified and validated. However, if we are to have confidence in our FOSS components on the basis of this analysis, we also need to have confidence in the tools and processes that we use to construct and verify them, as well as the specific revisions and configurations of all of the inputs involved.
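One ingredient of that confidence is being able to identify precisely which inputs went into a construction. A minimal Python sketch, assuming each input is identified by a name plus a pinned revision or content hash (the names and values below are hypothetical), is to derive a single digest from a canonical serialisation of the input manifest, so that two constructions with identical inputs share an identifier and any change to an input produces a different one. This is an illustration of the principle, not a description of how DCS works.

```python
import hashlib
import json


def manifest_digest(inputs):
    """Return a SHA-256 digest identifying a set of construction inputs.

    `inputs` maps an input name (source repository, tool, configuration file...)
    to a pinned revision or content hash. Keys are sorted so the digest does
    not depend on the order in which the inputs were listed.
    """
    canonical = json.dumps(inputs, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    # Hypothetical manifests: b lists the same inputs as a in a different order,
    # c changes one tool version.
    build_a = {"linux": "v6.6.1", "gcc": "13.2.0", "defconfig": "sha256:ab12"}
    build_b = {"gcc": "13.2.0", "defconfig": "sha256:ab12", "linux": "v6.6.1"}
    build_c = {**build_a, "gcc": "13.3.0"}
    print(manifest_digest(build_a) == manifest_digest(build_b))  # True
    print(manifest_digest(build_a) == manifest_digest(build_c))  # False
```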

Codethink has developed a reference process to achieve this, and a tool design pattern called Deterministic Construction Service (DCS) to continuously verify it. We completed a reference implementation of this design pattern using our reference process, based on Open Source tooling managed within GitLab. This was recently assessed by Exida against the ISO 26262 standard and certified as a qualified tool for integrity levels up to ASIL D.

A key goal of this work was to apply the RAFIA methodology to a concrete safety use case and validate its suitability in a formal certification process. We will be presenting more detailed information about DCS and its certification at the ELISA Workshop in November, including the role that STPA has played in this approach.
